Assessment of In-game Scenes and Forecasting of Overwhelming Player Emotions

Researchers have introduced EMOGRAPH, a multimodal emotion recognition framework designed to predict player emotions during gameplay. The system, tested on the emotionally intense horror game Outlast, combines physiological, behavioral, and self-reported data streams to build a nuanced picture of players' emotional responses.

Eye Movements, Facial Expressions, Questionnaire Responses, and Brain Wave Activity

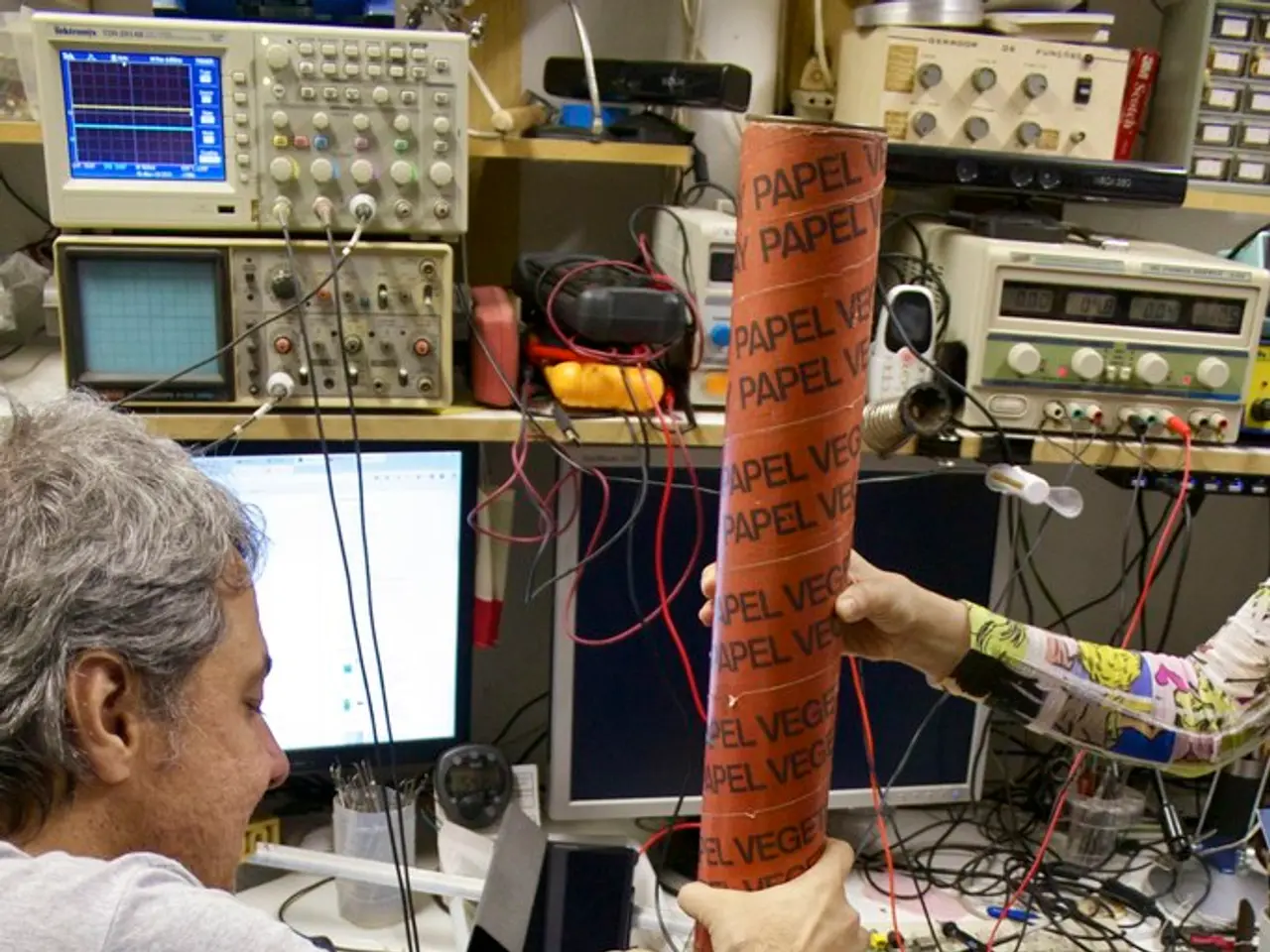

EMOGRAPH integrates four data sources: eye movements, facial expressions, questionnaire responses, and brain wave activity (EEG).

- Eye tracking provides insight into visual attention and arousal levels; rapid eye movements or dilated pupils can indicate fear or heightened alertness.

- Facial expression analysis uses computer vision techniques to recognize emotion-related expressions such as fear, surprise, or disgust from players’ facial muscle movements.

- Self-reported emotional states gathered via in-game or post-game surveys offer subjective data about the player’s feelings, helping to validate and calibrate the predictive model.

- EEG sensors measure electrical activity in the brain, capturing patterns associated with emotional states like stress, engagement, or fear.

Data Fusion, Feature Extraction, and Machine Learning

EMOGRAPH synchronizes these multimodal signals before fusing them into a single emotional profile, so that eye movements, facial expression changes, EEG signals, and self-reports all refer to the same gameplay moments.
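To make the synchronization step concrete, here is a minimal sketch of one common way to align streams sampled at different rates: for each reference timestamp, take the nearest sample from every stream and discard matches that fall outside a tolerance window. This is an illustration only, not EMOGRAPH's actual method; the function names, the stream names, and the 0.05-second tolerance are all assumptions.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t, tolerance):
    """Return the value whose timestamp is closest to t, or None if it is too far away."""
    i = bisect_left(timestamps, t)
    # Only the neighbours on either side of the insertion point can be nearest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    return values[best] if abs(timestamps[best] - t) <= tolerance else None


def align_streams(streams, reference_times, tolerance=0.05):
    """Build one row per reference time, with the nearest sample from each stream."""
    rows = []
    for t in reference_times:
        row = {"t": t}
        for name, (timestamps, values) in streams.items():
            row[name] = nearest_sample(timestamps, values, t, tolerance)
        rows.append(row)
    return rows


# Hypothetical streams: (sorted timestamps in seconds, sample values).
streams = {
    "gaze": ([0.00, 0.10, 0.20, 0.30], ["g0", "g1", "g2", "g3"]),
    "face": ([0.02, 0.12, 0.31], ["f0", "f1", "f3"]),
}
aligned = align_streams(streams, reference_times=[0.0, 0.1, 0.2, 0.3])
```

In this toy data the face stream has no sample near 0.2 s, so that row carries `None` for `face`; a real pipeline would have to decide whether to drop, interpolate, or mask such gaps.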

Relevant features are then extracted from each modality, such as fixation duration, facial Action Units, and EEG frequency bands. These features are normalized and combined into a unified feature vector.
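The normalize-and-combine step can be sketched as follows, assuming z-score normalization per feature column and simple concatenation across modalities. The article does not say which normalization EMOGRAPH uses, so both choices, along with the function names and sample values, are assumptions made for illustration.

```python
from statistics import mean, pstdev


def zscore_columns(rows):
    """Standardize each feature column to zero mean and unit variance."""
    columns = list(zip(*rows))
    stats = [(mean(col), pstdev(col)) for col in columns]
    # A constant column has zero spread; map it to 0.0 instead of dividing by zero.
    return [
        [(x - m) / s if s > 0 else 0.0 for x, (m, s) in zip(row, stats)]
        for row in rows
    ]


def fuse(modalities):
    """Normalize each modality separately, then concatenate per gameplay moment."""
    normalized = [zscore_columns(rows) for rows in modalities.values()]
    return [sum(parts, []) for parts in zip(*normalized)]


# Hypothetical features for two gameplay moments (one row per moment).
modalities = {
    "gaze": [[200.0], [400.0]],           # fixation duration in ms
    "face": [[0.0, 1.0], [2.0, 1.0]],     # two Action Unit intensities
    "eeg": [[4.0, 10.0], [8.0, 30.0]],    # power in two frequency bands
}
fused = fuse(modalities)
```

Each row of `fused` is one unified feature vector, here of length five (one gaze feature, two facial features, two EEG features). Normalizing before concatenation keeps a modality with large raw values, such as millisecond durations, from dominating the others.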

Machine learning algorithms, such as Support Vector Machines, neural networks, or ensemble methods, are employed to map the integrated features to predicted emotional states.
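The mapping from fused features to an emotion label can be illustrated with a deliberately simple stand-in. The sketch below uses a nearest-centroid classifier, which is not one of the methods named above; it is chosen only because it fits in a few dependency-free lines while exposing the same fit-then-predict shape that an SVM or neural network would. The labels and feature values are hypothetical.

```python
from collections import defaultdict
from math import dist


def fit_centroids(X, y):
    """Compute the mean feature vector (centroid) for each emotion label."""
    groups = defaultdict(list)
    for features, label in zip(X, y):
        groups[label].append(features)
    return {
        label: [sum(col) / len(rows) for col in zip(*rows)]
        for label, rows in groups.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical normalized feature vectors with self-reported labels.
X = [[-1.0, -0.8], [-0.9, -1.1], [1.0, 0.9], [1.2, 1.0]]
y = ["calm", "calm", "fear", "fear"]

centroids = fit_centroids(X, y)
label_high = predict(centroids, [0.8, 1.1])
label_low = predict(centroids, [-1.0, -1.0])
```

In practice the training labels would come from the questionnaire responses, and a stronger model would replace the centroid rule without changing the surrounding pipeline.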

Validation, Adaptation, and a Future in Game Design

The incorporation of subjective questionnaire data helps validate model predictions and fine-tune the system to individual differences, improving accuracy for the intense emotional context of a horror game like Outlast.

The results of this experiment show promise in identifying emotions and their triggers, suggesting that EMOGRAPH could be a valuable tool for game designers seeking to create emotionally engaging experiences for players.

By providing insights into user experience and predicting players' emotions, EMOGRAPH could widen possibilities in game design, offering a solution for computer-assisted emotional analysis of game sessions.

- Using more advanced artificial-intelligence techniques, EMOGRAPH could potentially make fuller use of its eye tracking data, analyzing the dynamics of players' gaze movements during gameplay for a more comprehensive understanding of their emotional states.

- Combining this richer gaze analysis with EMOGRAPH's other data sources, such as facial expressions, questionnaire responses, and brain wave activity, could lead to more nuanced and accurate prediction of player emotions and, in turn, to more immersive and engaging games.